Deepfakes in medicine: Opportunity or threat?

While many stories focus on how deepfakes could be misused in healthcare, I want to explore how they can be used responsibly in medicine. From my research, it is clear that synthetic data is a powerful tool for education, research, and direct patient care.

What are medical deepfakes?

A medical deepfake is a highly realistic yet entirely synthetic image, video, or audio clip created by AI.

While general deepfakes first gained attention on Reddit in 2017 and later found their way into consumer and commercial apps like Reface and Synthesia, medical deepfakes serve a distinct purpose in healthcare.

Modern AI can generate remarkably realistic medical imagery - from synthetic brain scans to simulated patient case videos - nearly indistinguishable from authentic content. Though this technology initially sparked concerns about misuse, healthcare innovators now harness its capabilities for beneficial applications.

While entertainment deepfakes like Nicolas Cage as Neo gained early attention, medical applications raise more nuanced questions about accuracy, privacy, and patient safety.

At the core of many deepfake systems are GANs (Generative Adversarial Networks) - AI systems that pit two neural networks against each other.

One network, the generator, creates synthetic data, while the other, the discriminator, judges whether each sample is real or generated. Through this competition, the generator's output improves until the discriminator can no longer reliably tell it apart from real data.
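To make the generator-versus-discriminator competition concrete, here is a deliberately tiny sketch in plain Python, not how any production system is built. It assumes one-dimensional "real" data drawn from a normal distribution with mean 4 (a hypothetical stand-in for a single measurement), a generator G(z) = a*z + b, and a logistic discriminator D(x) = sigmoid(w*x + c); all of these names and values are illustrative. Each round, the discriminator takes one gradient step to better separate real from generated samples, then the generator takes one step to make its samples score as more "real". Medical GANs use deep neural networks and frameworks such as PyTorch, but the alternating training loop is the same idea.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 1000, lr: float = 0.01, batch: int = 64, seed: int = 0):
    """Toy 1-D GAN trained with alternating gradient steps (illustrative only).

    Generator:     G(z) = a * z + b,          z ~ N(0, 1)
    Discriminator: D(x) = sigmoid(w * x + c)  (estimated probability that x is real)
    "Real" data:   hypothetical samples from N(4, 1)
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters
    w, c = 0.1, 0.0  # discriminator parameters
    for _ in range(steps):
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # Discriminator: gradient ascent on mean log D(real) + mean log(1 - D(fake)).
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw += (1.0 - d) * x
            gc += 1.0 - d
        for x in fake:
            d = sigmoid(w * x + c)
            gw -= d * x
            gc -= d
        w += lr * gw / batch
        c += lr * gc / batch

        # Generator (non-saturating loss): gradient ascent on mean log D(G(z)),
        # using d/dx log sigmoid(w*x + c) = (1 - D(x)) * w and the chain rule.
        ga = gb = 0.0
        for z in noise:
            d = sigmoid(w * (a * z + b) + c)
            ga += (1.0 - d) * w * z
            gb += (1.0 - d) * w
        a += lr * ga / batch
        b += lr * gb / batch
    return a, b, w, c
```

Calling `train_toy_gan()` returns the learned `(a, b, w, c)`. Because the two players keep reacting to each other, a parameter like `b` can oscillate around the real mean rather than settle, a small-scale version of the instability that makes real GANs hard to train.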

Medical GANs can create synthetic patient data that preserves the statistical properties of real data while safeguarding privacy. They provide realistic training scenarios for medical students and support the development of more accurate diagnostic tools. The key to success lies in training these networks on high-quality medical datasets and implementing rigorous validation processes.
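"Rigorous validation" can start with something as simple as checking that synthetic values reproduce the summary statistics of the real ones. The sketch below assumes a single numeric feature (hypothetical systolic blood pressure readings) and an arbitrary tolerance, and compares means and standard deviations with Python's standard `statistics` module. Validation in practice typically goes much further, for example comparing full distributions, correlations between features, and the performance of models trained on the data.

```python
import statistics
from typing import Dict, Sequence


def fidelity_report(real: Sequence[float], synthetic: Sequence[float], tolerance: float) -> Dict[str, object]:
    """Compare basic summary statistics of one numeric feature.

    `tolerance` is an assumed, illustrative threshold in the feature's own units.
    """
    mean_gap = abs(statistics.mean(real) - statistics.mean(synthetic))
    stdev_gap = abs(statistics.stdev(real) - statistics.stdev(synthetic))
    return {
        "mean_gap": mean_gap,
        "stdev_gap": stdev_gap,
        "passes": mean_gap <= tolerance and stdev_gap <= tolerance,
    }


# Hypothetical systolic readings (mmHg): real vs. generated.
real_bp = [118, 122, 130, 125, 135, 128]
synthetic_bp = [120, 121, 131, 124, 133, 129]
print(fidelity_report(real_bp, synthetic_bp, tolerance=2.0))
```

Here the two samples share the same mean and their standard deviations differ by roughly 0.6 mmHg, so the check passes; a generator that collapsed to a narrow range of values would fail on the standard-deviation gap.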

How deepfakes are being used in medicine

Next-generation medical training

Perhaps the most promising application lies in medical education.

The availability of real patients with specific conditions has always constrained traditional training. Synthetic patients created through deepfake technology now allow medical students and professionals to practice diagnosing and treating rare diseases without risk to actual patients.

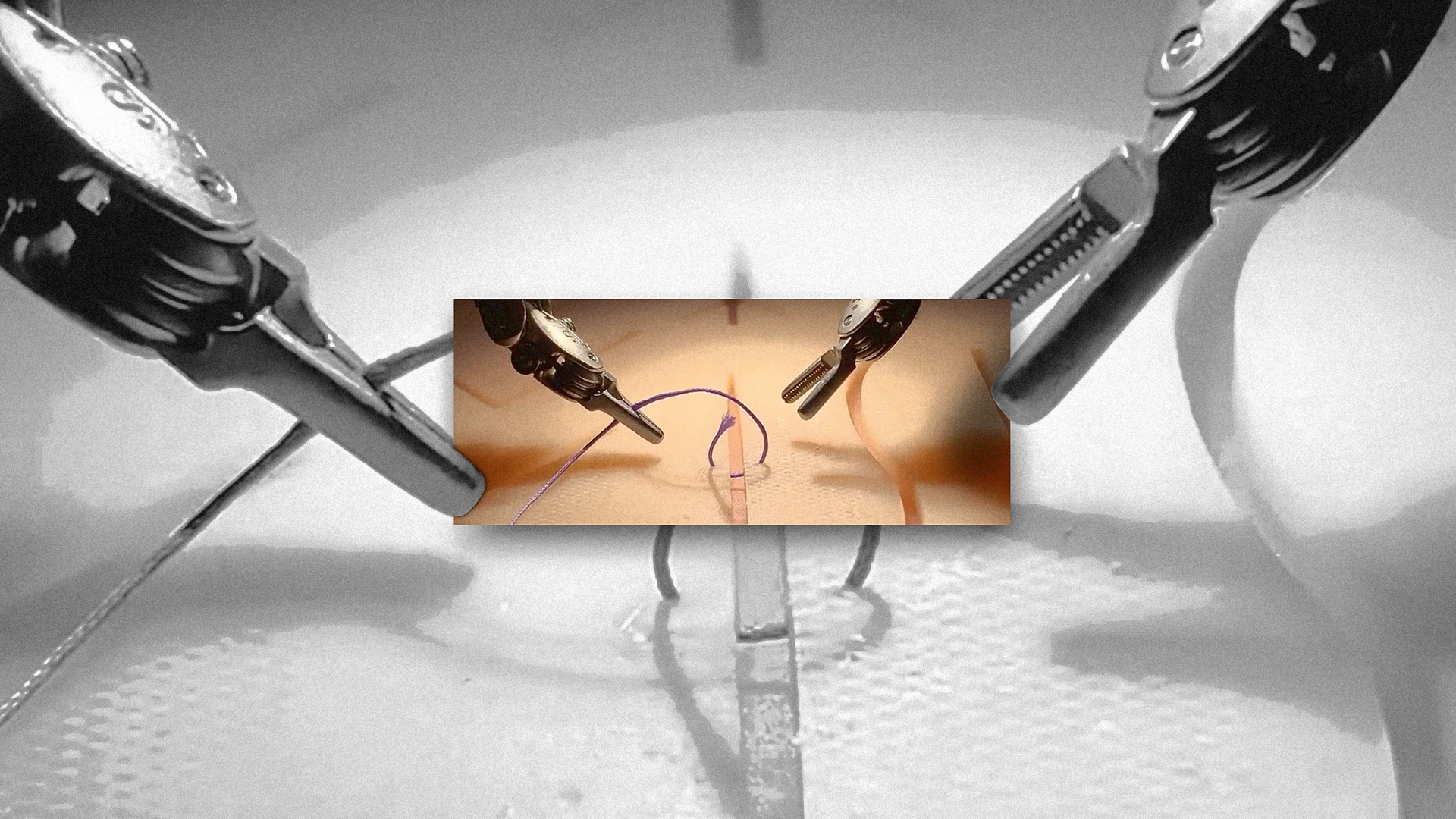

At Johns Hopkins Medicine, researchers taught an AI to conduct surgery by training robots on both real and simulated data. The simulated data helped the AI adapt to new, unseen surgical scenarios before it was tested in physical procedures. Synthetic training is particularly brilliant for rare conditions that many doctors might otherwise only read about in textbooks.

Enhancing diagnostic capabilities

Imagine learning to spot life-threatening conditions not just in a few pictures but in thousands of AI-generated variations.

This technology is particularly valuable in emergency medicine, where quick and accurate diagnosis can mean the difference between life and death. Through synthetic training, emergency room physicians can practice identifying subtle signs of conditions like stroke or heart attack across diverse patient presentations, improving their ability to make critical decisions under pressure.

Protecting privacy while advancing research

Medical research has long faced a dilemma: it needs extensive patient data, yet it must protect patient privacy. Deepfake technology offers an elegant solution by generating synthetic medical images that preserve the statistical patterns of real data while greatly reducing the risk of exposing patient identities.
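Synthetic data only protects privacy if the generator has not simply memorized real patients. One common sanity check, often called distance to closest record, measures how far each synthetic record is from its nearest real record and flags near-copies. The sketch below assumes small numeric records (hypothetical systolic/diastolic pairs) and an arbitrary threshold; an imaging pipeline might instead compare learned image embeddings, which is beyond this sketch.

```python
import math
from typing import List, Sequence, Tuple

Row = Tuple[float, ...]


def flag_near_copies(real_rows: Sequence[Row], synthetic_rows: Sequence[Row],
                     threshold: float) -> List[Tuple[Row, float]]:
    """Return (synthetic_row, distance) for rows closer than `threshold`
    (Euclidean distance) to some real row, i.e. possible memorized patients."""
    flagged = []
    for row in synthetic_rows:
        nearest = min(math.dist(row, r) for r in real_rows)
        if nearest < threshold:
            flagged.append((row, nearest))
    return flagged


# Hypothetical (systolic, diastolic) records.
real_rows = [(120, 80), (135, 85), (110, 70)]
synthetic_rows = [(121, 80), (150, 95)]
print(flag_near_copies(real_rows, synthetic_rows, threshold=2.0))
```

Only `(121, 80)` is flagged, because it sits 1 mmHg away from a real record; `(150, 95)` is about 18 units from its nearest real neighbour.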

Stanford Medicine's Department of Radiology has pioneered this approach, creating a database of synthetic chest X-rays that helps train AI diagnostic systems while ensuring patient confidentiality. Their system generates thousands of realistic variations showing different pathologies, enabling more robust training without compromising real patient data.

Other beneficial applications

Accelerating clinical trials through synthetic data is another key use case, which I explored in a previous newsletter, "Digital twins: AI-powered virtual patients, real results."

Deepfake technology also enabled David Beckham to speak nine different languages in the "Malaria Must Die" public health campaign. Deepfake voice synthesis has likewise helped April Kerner, who has advanced ALS, keep talking in her own voice.

Leading companies in the field

Several innovative companies are leading this revolution:

RYVER focuses on generating high-quality synthetic radiology images to reduce bias and improve medical imaging datasets

Segmed collaborates with RYVER to combine real-world and synthetic data, creating more accurate and inclusive AI models in healthcare

Carez AI develops hyper-realistic synthetic medical imaging for diagnostic tools, enabling scalable and privacy-compliant datasets

Gretel (acquired by NVIDIA) accelerates the adoption of synthetic data across various domains, including healthcare

⚔️ The road ahead: navigating challenges

Medical deepfake technology is a double-edged sword.

While beneficial for research and training, it poses substantial risks. Clinicians may develop "synthetic trust" by relying too heavily on AI-generated data. If the training data are skewed, the technology can perpetuate biases, leading to inequitable care, particularly for underrepresented groups. Most concerning is the potential for malicious actors to manipulate medical images, fabricate credentials, or spread harmful misinformation. Such actions could erode trust in healthcare systems and create serious privacy and security vulnerabilities.

Moving forward requires careful navigation. Experts recommend a measured approach focused on rigorous validation, continuous human oversight, and transparent AI implementation. By combining real and synthetic data - with ongoing evaluation on real patient data - healthcare systems can improve model performance without sacrificing accuracy in real-world use. As this technology evolves, robust ethical frameworks and regulatory standards become crucial for protecting patient safety and healthcare integrity.
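The advice to combine real and synthetic data while evaluating continuously has a practical corollary: the evaluation set should contain only real patients, so any gain from synthetic data is measured against reality. The helper below is a hypothetical sketch of that split; its name and the `synthetic_fraction` parameter (the share of the training set that is synthetic) are illustrative assumptions, not a recommended configuration.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def mix_real_and_synthetic(real: Sequence[T], synthetic: Sequence[T], synthetic_fraction: float,
                           test_size: int, seed: int = 0) -> Tuple[List[T], List[T]]:
    """Return (train, test). `test` holds real records only; `train` mixes the
    remaining real records with enough synthetic ones to make up roughly
    `synthetic_fraction` of the training set."""
    if not 0.0 <= synthetic_fraction < 1.0:
        raise ValueError("synthetic_fraction must be in [0, 1)")
    rng = random.Random(seed)
    shuffled = list(real)
    rng.shuffle(shuffled)
    test = shuffled[:test_size]  # held-out evaluation set: real data only
    train_real = shuffled[test_size:]
    # Solve n_syn / (len(train_real) + n_syn) = synthetic_fraction for n_syn.
    n_syn = round(len(train_real) * synthetic_fraction / (1.0 - synthetic_fraction))
    n_syn = min(n_syn, len(synthetic))  # cannot use more synthetic records than exist
    train = train_real + rng.sample(list(synthetic), n_syn)
    rng.shuffle(train)
    return train, test
```

Reporting the same real-only test metrics for models trained with and without the synthetic share is one simple way to check whether the synthetic data is actually helping.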

The urgency of addressing these issues is underscored by our inability to reliably identify deepfakes: human detection accuracy averages only 55%, barely better than the 50% expected from guessing. While AI detection systems offer promise, they struggle to keep pace with rapidly advancing deepfake technology.

Building resilience

Healthcare organizations must invest in detection systems that combine multiple approaches, from metadata analysis to spotting visual or audio inconsistencies. Implementing verification protocols for critical communications has proven valuable, with many institutions adopting multi-factor authentication for sensitive exchanges.
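Detection tries to spot fakes after the fact; verification makes tampering evident up front. As one illustrative building block, assuming the imaging system and the receiving clinic already share a secret key (key management is out of scope here), the sender can attach an HMAC-SHA256 tag to each file using Python's standard `hmac` module, and any altered byte makes verification fail. This is a sketch of the general technique, not a description of any particular institution's protocol; the key and file contents are placeholders.

```python
import hashlib
import hmac


def sign(data: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the file contents."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()


def verify(data: bytes, key: bytes, tag: str) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(sign(data, key), tag)


key = b"hypothetical-shared-secret"
scan = b"placeholder image bytes"
tag = sign(scan, key)
print(verify(scan, key, tag))            # True: untouched file
print(verify(scan + b"\x00", key, tag))  # False: one extra byte
```

An HMAC proves the file came from someone holding the key and was not changed in transit; it says nothing about whether the original image was genuine, which is why it complements rather than replaces detection.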

Most crucially, healthcare providers need training to spot potential deepfakes, while patients require education on evaluating online medical information.

🔮 The next decade: Crystal ball view

Within 3 years

Soon, synthetic data will become as common in research as lab coats. Surgeons will plan procedures using 3D-printed organs based on deepfake-generated imagery matching their patients’ unique anatomy.

Meanwhile, the FDA will establish ground rules for this digital frontier, ensuring the technology helps rather than harms.

5 years from now

Your doctor might show you personalized visualizations of how different treatments would affect your body specifically - like having a crystal ball for healthcare decisions!

Blockchain-based verification systems will become standard, creating unalterable records of authentic medical content.
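The "unalterable records" in that prediction rest on a simple mechanism: each entry stores a hash of the content plus the hash of the previous entry, so changing any earlier record breaks the chain from that point on. The sketch below is a minimal, single-machine hash chain using Python's `hashlib`; a real blockchain adds replication and a consensus protocol across many parties, both omitted here, and the entry fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _link_hash(prev: str, content: str, note: str) -> str:
    """Hash an entry's fields together with the previous entry's hash."""
    payload = json.dumps({"prev": prev, "content": content, "note": note}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_record(ledger: list, data: bytes, note: str) -> None:
    """Append a record of `data`, stored only as its SHA-256 digest."""
    prev = ledger[-1]["hash"] if ledger else GENESIS
    content = hashlib.sha256(data).hexdigest()
    ledger.append({"prev": prev, "content": content, "note": note,
                   "hash": _link_hash(prev, content, note)})


def chain_is_intact(ledger: list) -> bool:
    """Recompute every link; an edited field or broken link returns False."""
    prev = GENESIS
    for entry in ledger:
        if entry["prev"] != prev or entry["hash"] != _link_hash(prev, entry["content"], entry["note"]):
            return False
        prev = entry["hash"]
    return True


ledger = []
append_record(ledger, b"chest x-ray #1", "acquired")
append_record(ledger, b"chest x-ray #1, annotated", "radiologist review")
print(chain_is_intact(ledger))  # True
ledger[0]["note"] = "edited later"
print(chain_is_intact(ledger))  # False
```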

A decade ahead

Medical education might transform completely with immersive simulation environments. New doctors could train with deepfake-generated patients, having "seen" thousands of cases before treating their first real patient.

We might even see AI systems running synthetic clinical trials based on existing medical knowledge, dramatically accelerating early drug development while reducing costs and risks.

Embracing the future responsibly (and excitedly!)

Like electricity or the internet before it, deepfake technology isn't inherently good or bad - its impact depends entirely on how we use it. With robust safeguards and ethical frameworks, medical deepfakes could revolutionize healthcare in ways we're only beginning to imagine.

Rather than using this advanced digital technology to edit selfies, we're applying it to save lives. While this creates remarkable opportunities for patient care, it also presents healthcare leaders with fascinating challenges to navigate.